
There is a quiet but growing shift in how data is created. Not gathered, not collected, but made—on purpose, for a reason. Until recently, training data was the kind of thing you hunted down in public datasets or scraped from the web. Now, we can create it ourselves just by describing what we want.

That’s what the new synthetic data generator does: it turns natural language into structured, useful datasets. No code, no crawling. Just tell it what you need. This changes how we think about data, especially when real data is sensitive, scarce, or hard to get.

Traditional data collection is messy. It involves permissions, formatting, cleaning, balancing, and sometimes guessing. It often takes longer to prepare the dataset than to train the model. With a synthetic data generator, the process runs the other way: you start from a description of the data you want instead of from whatever data you can find. The tool uses large language models to understand your intent in plain English, then creates rows of data that fit your description. You might say, "Generate 1,000 examples of customer support queries with varied tones and issues," and get a complete, usable table. It understands structure, context, and variation. That ability to take a high-level request and turn it into detailed examples is a huge step forward.
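To make the request-to-table idea concrete, here is a minimal Python sketch. It is not the generator's actual interface: `build_prompt`, `parse_rows`, the field names, and `fake_model` (a canned stand-in for a real language model call) are all hypothetical, chosen only to show a plain-English request being turned into a prompt and the reply being filtered into rows.

```python
import json

def build_prompt(description, fields, n_rows):
    """Turn a plain-English request into a prompt that asks for JSON rows."""
    return (
        f"{description}\n"
        f"Return exactly {n_rows} rows as a JSON array of objects "
        f"with these keys: {', '.join(fields)}."
    )

def parse_rows(reply, fields):
    """Keep only the rows that are objects containing every requested field."""
    data = json.loads(reply)
    if not isinstance(data, list):
        raise ValueError("expected a JSON array of rows")
    return [
        {f: row[f] for f in fields}
        for row in data
        if isinstance(row, dict) and all(f in row for f in fields)
    ]

def fake_model(prompt):
    """Hypothetical stand-in for a real LLM call; returns a canned reply."""
    return json.dumps([
        {"query": "My invoice is wrong again!", "tone": "angry", "issue": "billing"},
        {"query": "Could you help me reset my password?", "tone": "polite", "issue": "account"},
        {"query": "app keeps crashing", "tone": "terse"},  # no "issue" key, so it is dropped
    ])

FIELDS = ["query", "tone", "issue"]
prompt = build_prompt(
    "Generate customer support queries with varied tones and issues.",
    FIELDS,
    n_rows=3,
)
rows = parse_rows(fake_model(prompt), FIELDS)
```

Filtering the reply rather than trusting it reflects a design choice: model output can be incomplete, so malformed rows are discarded instead of reaching the training set.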

It’s not just about speed. A synthetic data generator lets you shape datasets the way you need. If you’re building a classifier for medical records but can’t use real patient data due to privacy rules, you can simulate realistic cases instead. You can control the proportions, inject rare cases, and even design edge scenarios that rarely appear in organic data. And because you write your needs in natural language, there’s no barrier for non-developers. A product manager or a domain expert can drive the dataset creation without needing a background in data engineering.
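Controlling proportions usually comes down to deciding how many rows each category should get before you write the request. The sketch below assumes a hypothetical helper, `allocate_counts`, and made-up category names; it uses largest-remainder rounding so the per-category counts always add up to the requested total.

```python
from math import floor

def allocate_counts(total, proportions):
    """Split `total` rows across categories using largest-remainder rounding."""
    if abs(sum(proportions.values()) - 1.0) > 1e-9:
        raise ValueError("proportions must sum to 1")
    raw = {name: total * share for name, share in proportions.items()}
    counts = {name: floor(value) for name, value in raw.items()}
    leftover = total - sum(counts.values())
    # Hand the remaining rows to the categories with the largest fractional parts.
    by_remainder = sorted(raw, key=lambda name: raw[name] - counts[name], reverse=True)
    for name in by_remainder[:leftover]:
        counts[name] += 1
    return counts

# Illustrative mix: mostly routine cases, plus deliberately injected rare ones.
plan = allocate_counts(
    1000,
    {"routine": 0.90, "rare_condition": 0.07, "edge_case": 0.03},
)
```

Each count in `plan` can then be written into the natural-language request, for example "generate 30 edge cases".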

Some types of data are hard to access; others are biased or incomplete. If you train a chatbot only on complaints, it might struggle with praise. If you build a fraud detector from only clean transactions, it won’t recognize clever scams. The problem isn’t always the quantity of data. Often it’s the gaps. Synthetic data helps you fill them. You can write a request for specific types of examples that are missing in your training set. You can even adjust how realistic or extreme the generated data should be.

The synthetic data generator is not meant to replace real-world data entirely. It’s a supplement. It fills blind spots, helps with cold starts, and ensures balance across categories. One example is intent recognition. If you want a voice assistant to handle dozens of request types, you need examples of each. But some intents won’t show up often in real logs. You can use synthetic data to balance those categories so your model doesn't overfit to the most common ones. It's also useful for simulating the messiness of real input, such as typos, accents, and informal phrasing, which people produce in the real world but which rarely shows up in polished datasets.
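For the intent recognition case, a small sketch can show how to work out how many synthetic examples each intent needs and how to add a simple typing slip. The intent names, the target of 20 examples per intent, and both helper functions are illustrative assumptions, not part of any specific tool.

```python
import random
from collections import Counter

def synthetic_shortfall(real_labels, intents, target_per_intent):
    """How many synthetic examples each intent needs to reach the target."""
    counts = Counter(real_labels)
    return {intent: max(0, target_per_intent - counts[intent]) for intent in intents}

def swap_typo(text, rng):
    """Swap two adjacent characters to imitate a simple typing slip."""
    if len(text) < 2:
        return text
    i = rng.randrange(len(text) - 1)
    chars = list(text)
    chars[i], chars[i + 1] = chars[i + 1], chars[i]
    return "".join(chars)

# Made-up log counts: common intents dominate and one intent never appears.
logs = ["play_music"] * 50 + ["set_timer"] * 12 + ["call_contact"] * 3
intents = ["play_music", "set_timer", "call_contact", "report_emergency"]
needed = synthetic_shortfall(logs, intents, target_per_intent=20)

rng = random.Random(7)  # fixed seed so the noisy version is reproducible
noisy = swap_typo("set a timer for ten minutes", rng)
```

Here `needed` lists the gap per intent, including intents absent from the logs, and `noisy` is the same utterance with two neighboring characters swapped.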

The synthetic data generator doesn’t just spit out random values. It builds based on context. When you describe the dataset you need, the model interprets that request and then crafts entries that make sense together. If you ask for a customer feedback table with fields like "Issue Type," "Sentiment," and "Resolution Time," it understands how these elements relate. It won't just put "Password Reset" next to a negative sentiment every time. It can vary tones, simulate delay patterns, and mimic realistic interactions. If you refine your request, it can regenerate the set with your changes quickly and without hassle.
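Whether the fields actually relate sensibly is something you can check on the output. The sketch below takes the three fields from the example above and assumes a sentiment label set and a numeric resolution time (for instance in hours); both are assumptions made for illustration. It flags malformed rows and any issue type that is always paired with the same sentiment.

```python
from collections import defaultdict

REQUIRED_FIELDS = ("Issue Type", "Sentiment", "Resolution Time")
ALLOWED_SENTIMENTS = {"positive", "neutral", "negative"}  # assumed label set

def row_problems(row):
    """Return a list of human-readable problems found in one generated row."""
    problems = [f"missing field: {f}" for f in REQUIRED_FIELDS if f not in row]
    if "Sentiment" in row and row["Sentiment"] not in ALLOWED_SENTIMENTS:
        problems.append(f"unknown sentiment: {row['Sentiment']}")
    if "Resolution Time" in row:
        value = row["Resolution Time"]
        # Assumed convention: resolution time is a non-negative number.
        if not isinstance(value, (int, float)) or value < 0:
            problems.append("resolution time must be a non-negative number")
    return problems

def single_sentiment_issues(rows):
    """Issue types that only ever appear with one sentiment (a sign of low variety)."""
    seen = defaultdict(set)
    for row in rows:
        seen[row["Issue Type"]].add(row["Sentiment"])
    return sorted(issue for issue, sentiments in seen.items() if len(sentiments) == 1)

sample = [
    {"Issue Type": "Password Reset", "Sentiment": "negative", "Resolution Time": 2.5},
    {"Issue Type": "Password Reset", "Sentiment": "positive", "Resolution Time": 0.5},
    {"Issue Type": "Billing Error", "Sentiment": "negative", "Resolution Time": 30},
    {"Issue Type": "Billing Error", "Sentiment": "negative", "Resolution Time": 12},
]
flat_issues = single_sentiment_issues(sample)
```

In this sample, "Billing Error" would be flagged because it only appears with a negative sentiment, which is a cue to refine the request and regenerate.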

This tool is grounded in natural language processing. That means it understands how people describe situations, outcomes, and expectations. If you say, “Make 20% of the records show a failed login attempt followed by an angry message,” it knows how to follow those instructions. It applies probability, pattern recognition, and controlled randomness to ensure your dataset isn’t too repetitive. This leads to better generalization during model training.
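"Controlled randomness" can be illustrated with a few lines of Python. This is not how the generator works internally; it is a sketch of the idea, with a hypothetical function name, showing how a fixed share of rows (20% here) can be chosen at random but reproducibly to carry a pattern such as a failed login followed by an angry message.

```python
import random

def pick_pattern_rows(n_rows, fraction, seed=0):
    """Choose which row indices should carry the requested pattern."""
    rng = random.Random(seed)  # seeded, so the same choice can be reproduced
    k = round(n_rows * fraction)
    return set(rng.sample(range(n_rows), k))

chosen = pick_pattern_rows(1000, 0.20, seed=42)
scenario = [
    "failed_login_then_angry_message" if i in chosen else "ordinary_session"
    for i in range(1000)
]
```

The share is exact, but which rows carry the pattern varies with the seed, so the dataset follows the instruction without being laid out in a predictable order.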

There's also control over scale. You can ask for ten examples to test an idea or ten thousand to train a model. The generator doesn't slow down as complexity rises. It's built on models that already understand broad knowledge, so it can simulate industries, demographics, or product categories, even if your prompt is vague. That means you can prototype faster and improve quality through iterations.

No tool is flawless. Synthetic data is still artificial. If your prompt is too vague or limited, the output may reflect that. It also lacks the unplanned variety of real-world behavior: patterns that naturally appear in user data might not show up unless you specifically request them. While the generator can mimic tone and structure well, it may still produce outliers that need review. You need to be clear in what you ask and test whether the generated data works for your model.
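A quick review pass can catch some of these problems before training. The sketch below is one possible check, not a complete validation: the function name and the sample texts are made up, and it only counts near-verbatim duplicates (ignoring case and extra spaces) and reports each label's share.

```python
from collections import Counter

def review_report(texts, labels):
    """Count repeated texts and report each label's share of the dataset."""
    normalized = [" ".join(t.lower().split()) for t in texts]
    duplicates = len(normalized) - len(set(normalized))
    label_share = {label: n / len(labels) for label, n in Counter(labels).items()}
    return {"duplicates": duplicates, "label_share": label_share}

texts = [
    "Reset my password",
    "reset  my password",  # same request with different case and spacing
    "Where is my order?",
    "Cancel my plan",
]
labels = ["account", "account", "shipping", "billing"]
report = review_report(texts, labels)
```

A high duplicate count or a lopsided label share suggests the prompt needs more detail. The final test is still whether a model trained on the data performs well on real examples.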

Still, the tool has clear value. It makes machine learning more accessible. Teams can move faster, explore more ideas, and avoid the risks tied to real data. It also makes learning and experimentation safer. Students can train models without privacy concerns. Small teams can build early versions of products without a trove of past data.

One key strength is rare event simulation. For things like cybersecurity threats, insurance cases, or emergency incidents, real data is limited. A synthetic data generator helps you create those examples, understand rare behavior, and improve system readiness. You control the data, not the other way around.

The synthetic data generator makes building datasets faster and easier. You can describe what you need in natural language, and the tool creates structured data to match. It’s useful for training models, filling data gaps, and handling sensitive use cases without real user data. While it doesn’t replace real-world data, it adds flexibility and control. For developers, students, and small teams, it offers a practical way to move forward without waiting on hard-to-find or restricted datasets. It shifts who can build data and how.

Advertisement

Curious about how Snapchat My AI vs. Bing Chat AI on Skype compares? This detailed breakdown shows 8 differences, from tone and features to privacy and real-time results

What Large Language Models (LLMs) are, how they work, and their impact on AI technologies. Learn about their applications, challenges, and future potential in natural language processing

Argilla 2.4 transforms how datasets are built for fine-tuning and evaluation by offering a no-code interface fully integrated with the Hugging Face Hub

How a synthetic data generator can help you build training datasets using natural language. Speed up your AI development without writing code or using sensitive real-world data

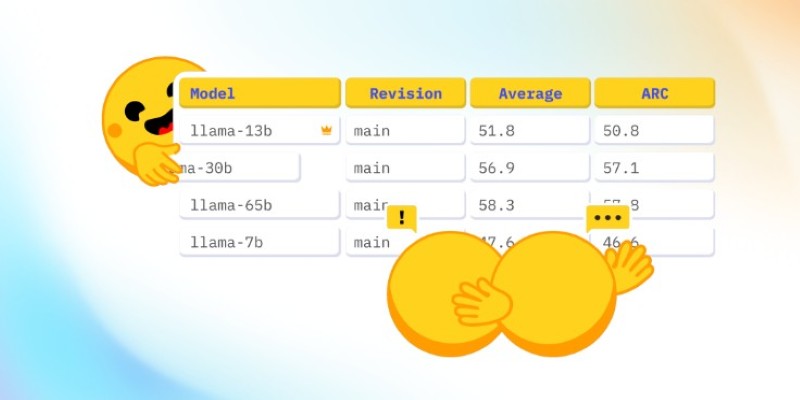

How CO₂ emissions and models performance intersect through data from the Open LLM Leaderboard. Learn how efficiency and sustainability influence modern AI development

Discover how ChatGPT can enhance your workday productivity with practical uses like summarizing emails, writing reports, brainstorming, and automating daily tasks

A top Korean telecom investment in Anthropic AI marks a major move toward ethical, global, and innovative AI development

From the legal power of emojis to the growing threat of cyberattacks like the Activision hack, and the job impact of ChatGPT AI, this article breaks down how digital change is reshaping real-world consequences

What is the AI alignment control problem, and why does it matter? Learn how AI safety, machine learning ethics, and the future of superintelligent systems all depend on solving this growing issue

How self-speculative decoding improves faster text generation by reducing latency and computational cost in language models without sacrificing accuracy

Oracle adds generative AI to Fusion CX, enhancing customer experience with smarter and personalized business interactions

OpenRAIL introduces a new standard in AI development by combining open access with responsible use. Explore how this licensing framework supports ethical and transparent model sharing