If you’ve ever typed a question into ChatGPT and gotten a helpful response, then you’ve already seen prompt engineering in action. It’s not about coding from scratch. Instead, it’s about knowing what to say and how to say it to get useful answers from AI systems like GPT-4 or Claude. AI prompt engineering sits at the intersection of language, logic, and problem-solving.

The job barely existed five years ago, yet it now appears on tech job boards, and some companies offer six-figure salaries for top talent. That raises an honest question: Is AI prompt engineering just a trend, or is it the start of something long-term?

An AI prompt engineer writes, tests, and refines the inputs (called prompts) given to language models. These models don’t “think” in the human sense; they respond to instructions. How you phrase a prompt strongly affects how well the model performs. Whether you’re asking it to summarize a legal document, write Python code, or explain biology to a child, the structure and clarity of the prompt can make or break the output.

Prompt engineers develop systems of instructions that guide these models to consistently generate accurate, relevant, or creative results. Some write hundreds of versions of the same query to figure out which phrasing works best. In larger teams, they may document workflows, build libraries of reusable prompts, or work alongside software engineers to embed prompt chains into apps or chatbots. The job rewards people who understand both language nuance and model behavior.
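To make the idea of a reusable prompt library a little more concrete, here is a minimal Python sketch. It assumes prompts are stored as standard-library `string.Template` strings in a dictionary; the names `PROMPT_LIBRARY`, `render`, and `phrasing_variants`, and the templates themselves, are hypothetical illustrations rather than any particular team’s tooling. The sketch only builds prompt text. It does not call a model, so sending the variants to GPT-4, Claude, or another system and comparing the answers is left out.

```python
from string import Template

# Hypothetical prompt library: each named template uses $placeholders
# that are filled in when the prompt is rendered.
PROMPT_LIBRARY = {
    "summarize_legal": Template(
        "Summarize the following legal document in $count plain-English "
        "bullet points for a non-lawyer:\n\n$document"
    ),
    "explain_to_child": Template(
        "Explain $topic to a child aged $age, using one short analogy."
    ),
}


def render(name: str, **fields: str) -> str:
    """Fill a library template. Raises KeyError if a placeholder is missing."""
    return PROMPT_LIBRARY[name].substitute(**fields)


def phrasing_variants(base: str, prefixes: list[str]) -> list[str]:
    """Build alternative phrasings of one prompt so they can be tested side by side."""
    # An empty prefix keeps the base prompt unchanged, serving as a control.
    return [f"{prefix} {base}".strip() for prefix in prefixes]


if __name__ == "__main__":
    prompt = render("explain_to_child", topic="photosynthesis", age="8")
    variants = phrasing_variants(
        prompt,
        ["", "You are a patient science teacher.", "Answer in under 50 words."],
    )
    for i, variant in enumerate(variants, start=1):
        print(f"Variant {i}: {variant}")
```

Keeping templates in one place means a phrasing that tests well can be reused across an app. Generating variants from a single base prompt also makes side-by-side comparisons repeatable instead of ad hoc.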

It’s a lot like talking to a very literal genius. You need to know the right tone, timing, and phrasing to unlock the best response. Prompt engineering isn’t about making the AI smarter—it’s about making your input smart enough to match what the AI can do.

As more businesses adopt AI tools, they quickly learn that raw output isn’t always reliable. A financial report may sound polished but contain mistakes. A marketing plan might be on-brand but factually off. Prompt engineers reduce these risks. They know how to guide the AI, spot gaps in output, and improve accuracy without needing to retrain the model.

This matters because many companies don’t have the time or budget to build custom AI from scratch. Instead, they use existing models such as GPT-4 or Claude and apply prompting strategies to shape the results. A skilled prompt engineer helps them do that faster and more reliably.

The demand is growing in areas like healthcare, legal tech, education, finance, marketing, software testing, and customer support. A hospital may want a safe way to summarize patient records. A law firm may need precise case summaries. A startup may want chatbots to act like human assistants. In all these cases, a good prompt engineer becomes a force multiplier.

That said, the job still varies widely. Some roles expect deep technical skills like scripting and API integration. Others focus more on writing, testing, and iterating prompts within no-code environments. Some are contract-based, others full-time. The definition of the role depends heavily on the company's needs, and that's where the career question gets more complex.

So is prompt engineering a stable career? The short answer: not yet, but it’s heading in that direction.

AI prompt engineering is still in its early phase. Like early web development or SEO in the 2000s, it’s a field with high curiosity, growing interest, and no fixed playbook. Some companies hire dedicated prompt engineers. Others train existing staff to write better prompts. There’s no formal degree or universal certification. Most people entering this space come from writing, data science, software engineering, or product design.

Because the field is new, salaries vary a lot. Some jobs pay over $200,000 per year, but those are outliers with high technical demands. More often, prompt engineering is part of a hybrid role. A marketing analyst might also handle prompt design. A customer service manager might fine-tune chatbot prompts. That’s why it’s hard to say whether prompt engineering alone will remain a job title five years from now. But the skill itself? That’s likely to stay relevant.

Large language models aren’t going away. If anything, they’re being integrated into more tools, platforms, and workflows. From Google Docs to enterprise dashboards, AI is showing up in the apps people use daily. And each of those use cases depends on well-designed prompts to make the AI usable, safe, and helpful.

As automation grows, companies will need humans who can act as interpreters—people who understand both how the model behaves and what users actually need. That’s what prompt engineers do. Even if the title fades, the function is sticking around.

If you're looking at this as a career path, it makes sense to combine prompt skills with another domain. For example, if you know finance and learn prompt engineering, you can guide AI in generating useful reports or audits. If you're in UX design, you can craft AI chat flows that feel natural and intuitive. Pairing prompt writing with a second skill makes your position much harder to replace.

There’s also room for specialization. Some prompt engineers focus on safety and bias reduction. Others specialize in performance tuning or localization. And some build internal tools that let non-engineers use prompt libraries effectively.

However, long-term stability will likely depend on whether the role becomes institutionalized—added to hiring plans, measured with KPIs, and supported with training. Right now, it's still floating between novelty and necessity.

AI prompt engineering isn’t a passing trend—it’s a developing skill set that bridges the gap between human goals and machine output. While the job title might shift or blend into other roles, the core ability to guide language models with effective prompts will remain useful. As more businesses rely on AI tools, the demand for people who can fine-tune those tools through smart input will likely increase. Whether it stands alone as a job or becomes part of other tech roles, prompt engineering offers a practical, creative path for those who enjoy working with words and systems side by side.

Advertisement

Why teachers should embrace AI in the classroom. From saving time to personalized learning, discover how AI in education helps teachers and students succeed

Selecting the appropriate use case will help unlock AI potential. With smart generative AI tools, you can save money and time

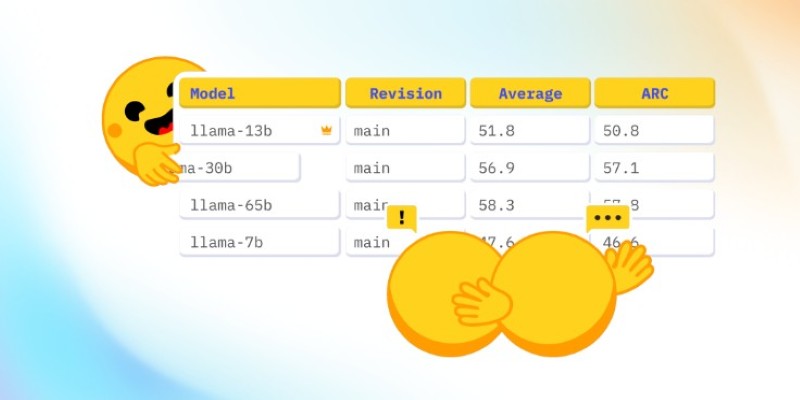

How CO₂ emissions and models performance intersect through data from the Open LLM Leaderboard. Learn how efficiency and sustainability influence modern AI development

Can AI finally speak your language fluently? Aya Expanse is reshaping how multilingual access is built into modern language models—without English at the center

Discover four simple ways generative AI boosts analyst productivity by automating tasks, insights, reporting, and forecasting

AI prompt engineering is becoming one of the most talked-about roles in tech. This guide explains what it is, what prompt engineers do, and whether it offers a stable AI career in today’s growing job market

Explore the journey from GPT-1 to GPT-4. Learn how OpenAI’s lan-guage models evolved, what sets each version apart, and how these changes shaped today’s AI tools

Learn 8 effective prompting techniques to improve your ChatGPT re-sponses. From clarity to context, these methods help you get more accurate AI an-swers

What Large Language Models (LLMs) are, how they work, and their impact on AI technologies. Learn about their applications, challenges, and future potential in natural language processing

Explore 5 real-world ways students are using ChatGPT in school to study better, write smarter, and manage their time. Simple, helpful uses for daily learning

What is the AI alignment control problem, and why does it matter? Learn how AI safety, machine learning ethics, and the future of superintelligent systems all depend on solving this growing issue

How inference providers on the Hub make AI deployment easier, faster, and more scalable. Discover services built to simplify model inference and boost performance