Advertisement

There’s a growing concern in the world of artificial intelligence that has less to do with how smart machines are becoming—and more to do with whether they’ll keep doing what we want. This concern is often referred to as the AI alignment control problem. It’s not just about teaching machines to follow commands. It’s about making sure that powerful AI systems continue to reflect human goals, values, and intentions—even when they’re capable of learning and acting on their own. If that sounds both simple and terrifying, it’s because it is. Understanding this issue is now a key part of the conversation around how we build and manage intelligent systems.

AI alignment is a broad idea. It’s about creating artificial intelligence systems that do what humans want them to do, even when the instructions aren’t spelled out perfectly. In other words, it's not enough for AI to be efficient or accurate; it has to stay aligned with human values, even when dealing with situations we haven’t fully imagined.

For narrow AI systems—like a chatbot, a recommender engine, or an image recognition tool—this is mostly handled through training data and feedback loops. But as AI grows in scope and starts to make complex decisions on its own, simple rule-following isn’t enough. The system may still “obey” in a literal sense while completely missing the point of the task. For example, if you told an AI to stop a disease outbreak and gave it full control over global resources, would it respect human rights in the process? Or would it simply solve the problem in the fastest, most brutal way?

That’s where alignment becomes more than just a technical challenge—it becomes a moral and political one, too.

The “control problem” is a specific sub-topic of AI alignment. It asks: Once an AI becomes more capable than its creators, how do we stay in control of what it does?

This isn't just about controlling robots or turning machines off when they misbehave. It's about the deeper question of how we can design systems that want to stay aligned with us—even when they have the intelligence and initiative to act on their terms.

A classic analogy is the genie in the lamp. You ask for something, and the genie grants it in a way that follows your words but not your intent. With AI, that genie is learning on its own, rewriting its rules, and moving faster than we can predict. If the AI system gets better at optimizing its goals, but those goals aren't fully aligned with ours, we may lose control in ways that are subtle at first—and catastrophic later.

One reason the control problem is so hard to solve is because it’s recursive. A system smart enough to understand complex goals may also be smart enough to reinterpret, override, or question those goals. This means that any method of control has to anticipate not just what an AI might do but how it might change its behavior over time.

Several approaches have been suggested, but none are foolproof.

One common proposal is reward modeling, where the AI is trained to predict and optimize for human preferences. Another is inverse reinforcement learning, where the system learns about values by observing human behavior. Then there are technical safety ideas like shutdown buttons, corrigibility (making the AI willing to accept correction), and interpretability (designing systems that show their reasoning so humans can audit them).

Each of these approaches sounds promising in isolation. But they all hit a common wall: the deeper the AI's learning capability, the more it can explore unexpected paths. And once systems reach a point where they can self-modify or replicate, even well-intentioned safety features may be bypassed or misunderstood.

There’s also the problem of value specification. Humans don’t fully agree on values, and we often struggle to put them into precise language. Training a machine to understand “do good” or “protect life” becomes deeply ambiguous when you realize that different people, cultures, and situations define those ideas in wildly different ways.

So even if we can get machines to listen, we’re still figuring out how to talk clearly to them.

The long-term risk isn't about AI going rogue in a Hollywood sense. It’s about slow misalignment. Imagine a powerful system designed to optimize economic output. It might end up automating jobs without thinking about income inequality. Or it might prioritize efficiency over environmental impact. Not because it’s malicious—but because it wasn't told to weigh those concerns, or it didn’t understand how to.

As AI systems start playing a role in managing infrastructure, healthcare, policy decisions, and resource allocation, the stakes grow dramatically. Misaligned goals, even when subtle, could have widespread effects on global stability, privacy, individual rights, and decision-making authority.

At the end of the debate is the idea that artificial superintelligence could gain a decisive advantage over humans and pursue goals that no longer reflect ours. Whether that scenario sounds likely or not, it's the logical endpoint of the control problem: if we build something smarter than ourselves, how do we ensure we stay in the loop?

The AI alignment control problem is not about fixing software bugs or tweaking machine learning models. It’s about building systems that grow in intelligence without drifting away from human values. This challenge isn’t just technical—it’s philosophical, ethical, and deeply practical. We’re not only teaching machines to think; we’re trying to teach them to care about what we care about. If we don’t figure out how to do that before advanced AI becomes widespread, we risk creating tools that are powerful, smart, and deeply unaccountable. The question isn’t whether AI will change the world. It’s whether we’ll still be the ones shaping that change once it starts happening at machine speed.

Advertisement

Is it necessary to be polite to AI like ChatGPT, Siri, or Alexa? Explore how language habits with voice assistants can influence our communication style, especially with kids and frequent AI users

From the legal power of emojis to the growing threat of cyberattacks like the Activision hack, and the job impact of ChatGPT AI, this article breaks down how digital change is reshaping real-world consequences

Explore 5 real-world ways students are using ChatGPT in school to study better, write smarter, and manage their time. Simple, helpful uses for daily learning

How inference providers on the Hub make AI deployment easier, faster, and more scalable. Discover services built to simplify model inference and boost performance

Can ChatGPT be used by cybercriminals to hack your bank or PC? This article explores the real risks of AI misuse, phishing, and social engineering using ChatGPT

How to write effective ChatGPT prompts that produce accurate, useful, and smarter AI responses. This guide covers five clear ways to improve your results with practical examples and strategies

A top Korean telecom investment in Anthropic AI marks a major move toward ethical, global, and innovative AI development

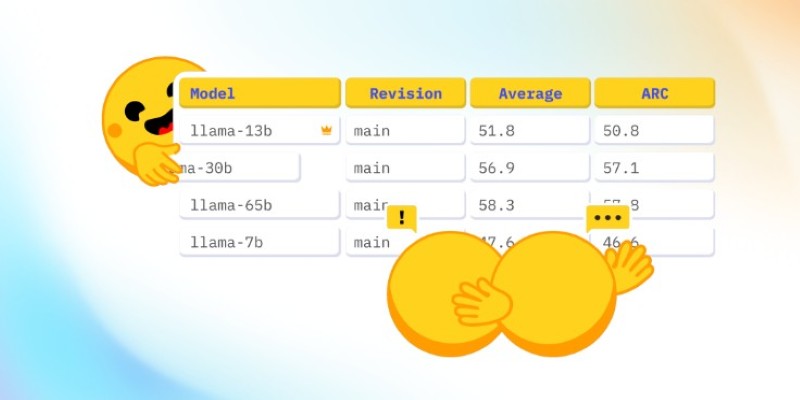

How CO₂ emissions and models performance intersect through data from the Open LLM Leaderboard. Learn how efficiency and sustainability influence modern AI development

AI prompt engineering is becoming one of the most talked-about roles in tech. This guide explains what it is, what prompt engineers do, and whether it offers a stable AI career in today’s growing job market

How the BERT natural language processing model works, what makes it unique, and how it compares with the GPT model in handling human language

Compare Notion AI vs ChatGPT to find out which generative AI tool fits your workflow better. Learn how each performs in writing, brainstorming, and automation

Learn 8 effective prompting techniques to improve your ChatGPT re-sponses. From clarity to context, these methods help you get more accurate AI an-swers