
Language is complicated, messy, and full of hidden meanings. Teaching machines to understand it isn't easy. That’s where models like BERT and GPT come in. Developed by Google and OpenAI, respectively, they are two of the most influential breakthroughs in natural language processing (NLP). Both are built on the transformer architecture, but they approach language in different ways.

While BERT reads in two directions to make sense of context, GPT generates language step by step like a storyteller. This article explains how the BERT natural language processing model works, how it differs from the GPT model, and why both matter.

BERT, short for Bidirectional Encoder Representations from Transformers, was introduced by Google in 2018. It marked a shift in how machines process language. Instead of reading a sentence only left-to-right or only right-to-left, BERT considers the words on both sides of a target word at the same time. This bidirectional reading lets it capture deeper context and the relationships between words.

At the core of the BERT natural language processing model is the transformer encoder. BERT is trained using two main strategies. The first is Masked Language Modeling (MLM). Here, a random subset of the words in a sentence (about 15% of tokens in the original setup) is masked, and BERT is tasked with predicting them. Because a missing word can only be recovered from the words around it, the model has to use the full context on both sides to guess it accurately. The second is Next Sentence Prediction (NSP), which teaches the model how sentences relate to each other. Given two sentences, BERT predicts whether the second one actually follows the first in the original text.
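The two pre-training objectives can be sketched in plain Python. This is a simplified illustration, not Google's implementation: it works on whole words instead of WordPiece tokens and selects each token independently with probability 0.15. Following the recipe described in the BERT paper, a selected token is replaced with `[MASK]` 80% of the time, with a random token 10% of the time, and left unchanged 10% of the time. For NSP, half the pairs use the true next sentence and half use some other sentence; the original setup draws that sentence from a different document, which this sketch leaves to the caller. The helper names `mask_tokens` and `make_nsp_example` are hypothetical.

```python
import random

MASK = "[MASK]"


def mask_tokens(tokens, vocab, rng, select_prob=0.15):
    """Simplified BERT-style masking (hypothetical helper).

    Returns (inputs, labels). labels[i] holds the original token at positions
    the MLM loss is computed on, and None everywhere else.
    """
    inputs = list(tokens)
    labels = [None] * len(tokens)
    for i, tok in enumerate(tokens):
        if rng.random() >= select_prob:
            continue  # not selected: no prediction target at this position
        labels[i] = tok  # the model must recover this original token
        roll = rng.random()
        if roll < 0.8:
            inputs[i] = MASK  # 80%: hide the token
        elif roll < 0.9:
            inputs[i] = rng.choice(vocab)  # 10%: swap in a random token
        # remaining 10%: keep the original token unchanged
    return inputs, labels


def make_nsp_example(sent_a, sent_b, other_sentences, rng):
    """Simplified NSP pair (hypothetical helper).

    With probability 0.5 the second segment is the true next sentence
    (is_next = 1); otherwise it is drawn from other_sentences (is_next = 0).
    The caller must make sure other_sentences does not contain sent_b.
    """
    if rng.random() < 0.5:
        second, is_next = sent_b, 1
    else:
        second, is_next = rng.choice(other_sentences), 0
    tokens = ["[CLS]"] + sent_a + ["[SEP]"] + second + ["[SEP]"]
    # Segment IDs tell the model which sentence each token belongs to.
    segment_ids = [0] * (len(sent_a) + 2) + [1] * (len(second) + 1)
    return tokens, segment_ids, is_next


rng = random.Random(0)
sentence = "the cat sat on the mat because it was warm".split()
masked_inputs, mlm_labels = mask_tokens(sentence, sorted(set(sentence)), rng)
print(masked_inputs)
print(mlm_labels)
print(make_nsp_example(["the", "cat", "sat"], ["it", "purred"],
                       [["stocks", "fell", "today"]], rng))
```

Only positions with a non-`None` label contribute to the MLM loss; the 10% "random token" and 10% "unchanged" cases exist so the model cannot assume that every prediction target is marked with `[MASK]`.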

BERT's design makes it well suited to tasks that require understanding the meaning behind words, such as question answering, sentence classification, and named entity recognition. It doesn't generate text; instead, it analyzes and interprets it. BERT is especially good at pulling out the intent behind a user's query or recognizing subtle differences in sentence meaning.

GPT, which stands for Generative Pre-trained Transformer, comes from OpenAI and is designed with a different goal in mind. While BERT is an encoder-only model, GPT is a decoder-only model. GPT reads text in one direction, left to right, and focuses on producing language, not just understanding it.

The GPT model learns by trying to predict the next word in a sentence, given the previous ones. During training, it reads large amounts of text from the internet and gradually learns grammar, facts, reasoning patterns, and how language is structured. GPT stacks many transformer decoder layers that use causal attention, meaning each word can attend only to the words before it, never to the ones after. This matches the generation setting, where future words do not exist yet, and it underpins GPT's ability to write coherent and relevant responses.
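Next-word prediction turns any text into training examples without manual labels: every position supplies a context (the words so far) and a target (the word that comes next). The sketch below, in plain Python with whole words standing in for tokens, shows two views of the same idea; `next_token_examples` and `shift_for_training` are hypothetical names. In an actual transformer, the shifted form is processed in a single pass, and causal attention is what keeps position t from seeing the target it is supposed to predict.

```python
def next_token_examples(tokens):
    """Spell out every (context, target) pair a causal language model learns from."""
    return [(tokens[: t + 1], tokens[t + 1]) for t in range(len(tokens) - 1)]


def shift_for_training(tokens):
    """Compact form used in practice: inputs[t] should predict targets[t]."""
    return tokens[:-1], tokens[1:]


words = "the cat sat on the mat".split()
for context, target in next_token_examples(words):
    print(" ".join(context), "->", target)
# the -> cat
# the cat -> sat
# the cat sat -> on
# the cat sat on -> the
# the cat sat on the -> mat
```

A six-word sentence yields five training targets, one per position after the first, which is why plain text at internet scale is enough to train the model.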

Because of this design, GPT is widely used in tasks like content creation, summarization, dialogue systems, and coding assistance. It can write essays, generate creative fiction, simulate conversations, or answer complex questions. The model doesn’t just recognize language; it produces it, building text one word at a time, with each new word chosen based on everything written so far.

One key feature of GPT is its adaptability. With zero-shot and few-shot prompting, users can steer the GPT model toward specific behaviors or styles by writing instructions and a handful of examples (or none at all) directly into the prompt, without retraining. Fine-tuning goes further by updating the model's weights on task-specific data. This flexibility suits real-time applications where generation, rather than analysis, is the goal.
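Zero-shot and few-shot prompting need no special machinery: the examples are simply text placed in front of the query, and the model continues the pattern. The sketch below builds such a prompt as a string. The `Input:`/`Output:` layout and the `build_prompt` helper are hypothetical choices for illustration, not a format any particular GPT model requires; the resulting string would be sent to whichever model or API you use.

```python
def build_prompt(instruction, examples, query):
    """Assemble a zero-shot (no examples) or few-shot prompt as plain text.

    examples is a list of (input_text, output_text) pairs shown to the model
    before the real query; the prompt ends with an open "Output:" slot for
    the model to complete.
    """
    parts = [instruction, ""]
    for text, answer in examples:
        parts += [f"Input: {text}", f"Output: {answer}", ""]
    parts += [f"Input: {query}", "Output:"]
    return "\n".join(parts)


few_shot = build_prompt(
    "Classify the sentiment of each review as positive or negative.",
    [("The battery lasts all day.", "positive"),
     ("It broke after a week.", "negative")],
    "Setup took five minutes and everything just worked.",
)
print(few_shot)
```

Passing an empty list gives the zero-shot version of the same prompt. Fine-tuning, by contrast, cannot be expressed as a string like this, because it changes the model's weights rather than its input.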

Though both models are based on the transformer architecture, their purposes and training methods set them apart.

BERT reads in both directions. This bidirectional approach helps it capture full context, which is useful for understanding. GPT reads in one direction, from left to right, which suits generation tasks where one word leads naturally to the next.
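In transformer terms, reading "in both directions" versus "left to right" comes down to the attention mask, which decides which positions each word may look at. The sketch below is a toy in plain Python (real models apply the mask to attention scores inside every layer), and the function names are hypothetical. Take the word "bank" in "the bank of the river": an encoder like BERT can use "river" to resolve its meaning, while a decoder like GPT, when processing "bank", sees only the words up to it.

```python
def bidirectional_mask(n):
    """Encoder-style mask (BERT): every position may attend to every position."""
    return [[1] * n for _ in range(n)]


def causal_mask(n):
    """Decoder-style mask (GPT): position i may attend only to positions 0..i."""
    return [[1 if j <= i else 0 for j in range(n)] for i in range(n)]


def visible_words(tokens, mask, i):
    """Return the words that position i is allowed to attend to."""
    return [tok for tok, allowed in zip(tokens, mask[i]) if allowed]


words = "the bank of the river".split()
i = words.index("bank")
print(visible_words(words, bidirectional_mask(len(words)), i))
# ['the', 'bank', 'of', 'the', 'river']
print(visible_words(words, causal_mask(len(words)), i))
# ['the', 'bank']
```

The causal mask is lower-triangular, so the last position sees the whole sentence while earlier positions see progressively less.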

BERT uses masked language modeling and next sentence prediction, which are focused on comprehension. GPT is trained using next-word prediction, enabling it to learn how to continue sentences or write paragraphs from scratch.

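BERT is an encoder-only model. GPT is decoder-only. This affects how each model processes input and produces output. An encoder turns the whole input into a contextual representation of every word, which suits understanding tasks; a decoder predicts one next word at a time, which suits generation.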
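A decoder generates text in a loop: predict a distribution over the next word, pick one, append it, and feed the longer sequence back in. The sketch below uses greedy decoding (always take the most likely word), which is only one of several decoding strategies; real systems often sample instead. The `toy_model` here is a hypothetical bigram table that looks only at the last word, standing in for a real GPT model that conditions on the entire prefix; the loop itself stays the same.

```python
EOS = "<eos>"  # end-of-sequence marker

# Hypothetical next-word probabilities keyed by the previous word.
BIGRAMS = {
    "the": {"cat": 0.6, "dog": 0.4},
    "cat": {"sat": 0.7, "ran": 0.3},
    "sat": {"on": 0.9, EOS: 0.1},
    "on": {"a": 0.8, "the": 0.2},
    "a": {"mat": 1.0},
    "mat": {EOS: 1.0},
}


def toy_model(tokens):
    """Return a {word: probability} dict for the next word given the sequence."""
    return BIGRAMS.get(tokens[-1], {EOS: 1.0})


def greedy_generate(model, prompt, max_new_tokens):
    """Autoregressive greedy decoding: append the most likely next word each step."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        probs = model(tokens)  # the model receives everything generated so far
        next_word = max(probs, key=probs.get)
        if next_word == EOS:
            break
        tokens.append(next_word)
    return tokens


print(" ".join(greedy_generate(toy_model, ["the"], max_new_tokens=10)))
# the cat sat on a mat
```

An encoder-only model such as BERT has no such loop: it reads a complete input once and returns a representation for each position.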

The BERT natural language processing model is better for interpretation tasks, like classifying texts, finding entities, or extracting answers from documents. GPT is more useful when the task involves language production, like writing emails, generating stories, or conducting conversations.

BERT has been used in Google Search to better understand what users are really asking. GPT models run in tools like ChatGPT and Copilot, helping people write code, create content, or automate communication.

Despite their differences, the two models can be complementary. Newer models try to combine the strengths of both, pairing bidirectional understanding with generative capabilities. Hybrid designs and multitask training are opening the door to models that both analyze and produce language with greater precision.

Both the BERT and GPT models belong to a broader shift in natural language processing brought about by the transformer architecture. Before transformers, most models relied on recurrent neural networks (RNNs) such as LSTMs, which process text one word at a time and struggled to keep track of context across long sentences.

The success of BERT changed how researchers and engineers approached NLP tasks. Suddenly, fine-tuning a general-purpose language model became more effective than training task-specific models from scratch. OpenAI’s GPT model pushed this further by showing that massive scale combined with simple training tasks could produce surprisingly capable systems.

As these models grow larger, the line between understanding and generation begins to blur. GPT-3 and GPT-4 can both interpret and produce text, while encoder-decoder models such as T5 and BART pair a bidirectional encoder with a left-to-right decoder for more flexible handling of NLP challenges. The field is evolving rapidly, and the future may involve unified models that do everything well, from understanding search queries to composing emails or analyzing medical records.

The BERT natural language processing model changed how machines understand language. Its ability to read in both directions and absorb context made it ideal for comprehension-based tasks. On the other side, the GPT model brought a shift in how machines generate text, enabling AI to write and interact more fluidly. While they share the same foundational architecture, their goals, training methods, and applications are quite different. BERT is about meaning. GPT is about expression. Together, they represent two halves of a bigger story: how machines are starting to handle language with a depth and fluency that once seemed impossible.

Advertisement

Discover four simple ways generative AI boosts analyst productivity by automating tasks, insights, reporting, and forecasting

Learn 8 effective prompting techniques to improve your ChatGPT re-sponses. From clarity to context, these methods help you get more accurate AI an-swers

Discover 8 legitimate ways to make money using ChatGPT, from freelance writing to email marketing campaigns. Learn how to leverage AI to boost your income with these practical side gigs

Discover practical methods to sort a string in Python. Learn how to apply built-in tools, custom logic, and advanced sorting techniques for effective string manipulation in Python

OpenRAIL introduces a new standard in AI development by combining open access with responsible use. Explore how this licensing framework supports ethical and transparent model sharing

What Large Language Models (LLMs) are, how they work, and their impact on AI technologies. Learn about their applications, challenges, and future potential in natural language processing

Explore the journey from GPT-1 to GPT-4. Learn how OpenAI’s lan-guage models evolved, what sets each version apart, and how these changes shaped today’s AI tools

How inference providers on the Hub make AI deployment easier, faster, and more scalable. Discover services built to simplify model inference and boost performance

Curious about how Snapchat My AI vs. Bing Chat AI on Skype compares? This detailed breakdown shows 8 differences, from tone and features to privacy and real-time results

Is it necessary to be polite to AI like ChatGPT, Siri, or Alexa? Explore how language habits with voice assistants can influence our communication style, especially with kids and frequent AI users

Compare Notion AI vs ChatGPT to find out which generative AI tool fits your workflow better. Learn how each performs in writing, brainstorming, and automation

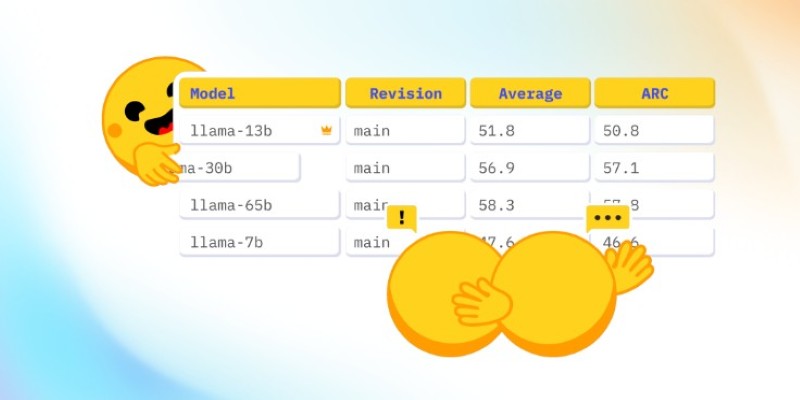

How CO₂ emissions and models performance intersect through data from the Open LLM Leaderboard. Learn how efficiency and sustainability influence modern AI development