
If you've ever used ChatGPT and ended up with vague, messy, or just plain wrong answers, the problem likely wasn't the AI. It was the prompt, because how you ask matters. A small change in wording, or one extra line of context, can completely change the response you get. Most people treat ChatGPT like a search bar and then wonder why the reply falls short.

But prompting isn’t about shouting into the void; it’s more like giving instructions to a helpful assistant who wants to do a good job but needs direction. Mastering a few prompting techniques can make a massive difference in the usefulness and accuracy of your chats.

One of the most common mistakes is being too vague. Instead of asking, "Write something about climate change," say, "Write a 300-word blog post on the effects of climate change on coastal cities in Southeast Asia." The more you rely on the model to read between the lines, the less control you'll have over what comes back. Even if something seems implied, spell it out: clarity in your prompt directly shapes the final output. If you want a list, ask for a list. If you want a paragraph, say that. Precision cuts down on fluff and keeps the AI closer to your intent.

Context sharpens responses. If you're asking ChatGPT to generate social media posts for your online bookstore, you'll get a very different answer if you say, "I sell vintage science fiction novels to collectors aged 35-60," than if you give no description at all. Want a medical answer tailored for a general audience? Say that. Need a tone that sounds informal and friendly? Mention it. AI doesn't guess well; it follows instructions. To improve your ChatGPT responses, feed it the backstory, tone, audience, and format, just as you would when briefing a real assistant.

Asking for too much in one breath will often confuse the model. Instead of saying, "Make a marketing plan and write five ad variations and design a landing page layout," break it down. Start with, "Write a basic outline of a marketing plan for a meal kit service targeting young professionals in urban areas." Then, follow up with the next task. When ChatGPT handles one focused thing at a time, it performs better. This also helps you steer the conversation more easily and correct or fine-tune one part before moving on.

It's easy to think "long" or "detailed" is enough of a direction, but these words mean different things depending on the topic. It's better to say, "Limit to 500 words," or "Give three bullet points, no more than 20 words each." These instructions guide the model's structure and length. If you're summarizing, tell it what to include or leave out. If you want a fictional story with only dialogue, say so. When the boundaries are clear, the content quality improves. These are the kinds of prompting techniques that eliminate guesswork and lead to cleaner results.

If you're picky about tone or structure, showing is faster than explaining. Give an example, even a short one. Let’s say you want email subject lines that sound clever but not salesy. Add one you’ve already used and liked, and ask it to match that style. Or paste a sample tweet and ask for variations in the same tone. This is especially useful when you’re working with creative writing, jokes, social copy, or brand voice. The model responds well when it has something concrete to mirror.

You don't have to start from scratch each time. If you have a decent list but don't love the phrasing, say, "Keep the same ideas but rewrite in a more casual tone." If the summary was too shallow, say, "Add more depth and real-world examples to each point." This ongoing refinement is one of ChatGPT's biggest advantages. It's like having an intern who never tires of editing. You can improve your ChatGPT responses just by building from the last one instead of asking from zero every time.

Negative prompting can be just as useful as positive instructions. If you don't want long introductions, repetitive phrasing, or promotional language, say so. You might write, "Explain this without using technical jargon" or "Avoid clichés like 'game-changer' or 'cutting-edge.'" This sets up guardrails. Think of it as telling the AI which roads to avoid while it navigates. It helps cut back on fluff, marketing speak, or stylistic choices you dislike. These boundaries let the model focus on what's useful and keep your final result from wandering off track.

If the reply is close but still not right, reframe the question. Instead of asking again the same way, shift the angle. For instance, if "Explain photosynthesis to a 10-year-old" gives you a boring result, try "Pretend you're a cartoon character explaining photosynthesis to kids." Or if "Summarize this article" feels flat, go with "Turn this article into a Twitter thread." Sometimes the fix isn't in the detail but in the perspective. The model can reshape content effectively, but only if you steer it with the right lens.

Writing a good prompt isn't just about wording. It's about structuring a clear, well-defined request that leaves little to chance. These prompting techniques don't require deep technical knowledge, just a bit of patience and some practice. Whether you're writing content, summarizing articles, drafting code, or brainstorming ideas, these tweaks can drastically improve your ChatGPT responses. When the prompt is specific, grounded in context, and clear in scope, the results stop feeling robotic and start becoming helpful. Treat it less like a Magic 8-Ball and more like a conversation, and it will respond accordingly.

Advertisement

Oracle adds generative AI to Fusion CX, enhancing customer experience with smarter and personalized business interactions

Discover how ChatGPT can enhance your workday productivity with practical uses like summarizing emails, writing reports, brainstorming, and automating daily tasks

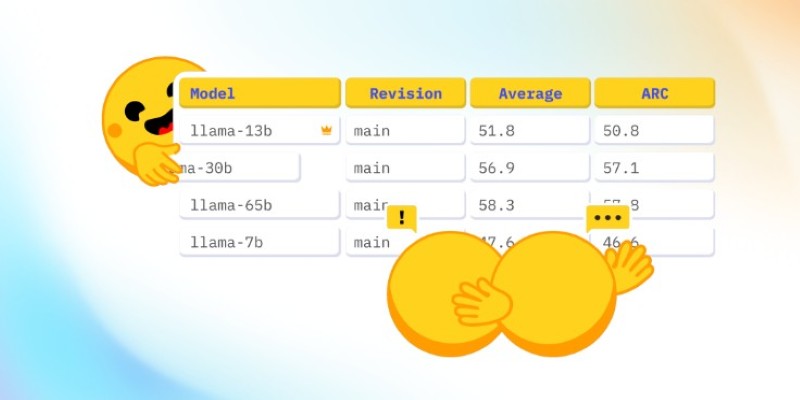

How CO₂ emissions and models performance intersect through data from the Open LLM Leaderboard. Learn how efficiency and sustainability influence modern AI development

Compare Notion AI vs ChatGPT to find out which generative AI tool fits your workflow better. Learn how each performs in writing, brainstorming, and automation

Can ChatGPT be used by cybercriminals to hack your bank or PC? This article explores the real risks of AI misuse, phishing, and social engineering using ChatGPT

How inference providers on the Hub make AI deployment easier, faster, and more scalable. Discover services built to simplify model inference and boost performance

OpenRAIL introduces a new standard in AI development by combining open access with responsible use. Explore how this licensing framework supports ethical and transparent model sharing

Is it necessary to be polite to AI like ChatGPT, Siri, or Alexa? Explore how language habits with voice assistants can influence our communication style, especially with kids and frequent AI users

Can AI finally speak your language fluently? Aya Expanse is reshaping how multilingual access is built into modern language models—without English at the center

Discover practical methods to sort a string in Python. Learn how to apply built-in tools, custom logic, and advanced sorting techniques for effective string manipulation in Python

Why teachers should embrace AI in the classroom. From saving time to personalized learning, discover how AI in education helps teachers and students succeed

How the BERT natural language processing model works, what makes it unique, and how it compares with the GPT model in handling human language