In open-source software, licenses have long helped define how code is shared, reused, and modified. But artificial intelligence brings added challenges. Models trained on large datasets can influence decisions, generate content, and impact lives in unexpected ways. Traditional licenses don’t account for these social and ethical dimensions.

OpenRAIL—short for Open Responsible AI Licenses—was created to bridge that gap. It aims to preserve the collaborative spirit of open source while setting clear expectations for responsible use. This approach gives developers more say in how their models are used after release and introduces much-needed accountability into the AI ecosystem.

AI development has become more accessible. Researchers and companies regularly release models to the public, encouraging collaboration and experimentation. But with that openness comes risk. AI systems can amplify bias, be used to deceive, or end up in contexts their creators never imagined. Once a model is out, traditional licenses offer little recourse if it's misused.

OpenRAIL responds to this by introducing use-based conditions that go beyond code. It was developed by researchers and legal experts seeking a middle path between fully open models and tightly controlled proprietary systems. Instead of limiting access, OpenRAIL allows developers to define how their models should or shouldn’t be used—making responsible intent part of the licensing framework.

OpenRAIL licenses are modular, meaning developers can select clauses relevant to their model’s purpose. The idea is to enable openness while discouraging harmful or unethical uses. It's not about imposing rigid control but about setting expectations clearly and upfront.
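
The modular idea can be illustrated with a minimal Python sketch. Everything in it is hypothetical: the `UseClause` structure, the clause keys, and the paraphrased wording are assumptions made for illustration and are not taken from any actual OpenRAIL license text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UseClause:
    """One use-based restriction (hypothetical structure, not real license text)."""
    key: str
    text: str


# Illustrative clause pool; the wording is paraphrased for this example only.
CLAUSE_POOL = {
    "no_disinformation": UseClause(
        "no_disinformation", "Do not use the model to generate misleading content."
    ),
    "no_surveillance": UseClause(
        "no_surveillance", "Do not use the model for surveillance of individuals."
    ),
    "no_discrimination": UseClause(
        "no_discrimination", "Do not use the model to discriminate against people."
    ),
}


def assemble_restrictions(selected_keys):
    """Return the clauses for the chosen keys, failing loudly on unknown keys."""
    unknown = [key for key in selected_keys if key not in CLAUSE_POOL]
    if unknown:
        raise KeyError(f"unknown clause keys: {unknown}")
    return [CLAUSE_POOL[key] for key in selected_keys]


# A developer picks only the clauses relevant to their model's purpose.
restrictions = assemble_restrictions(["no_disinformation", "no_surveillance"])
for clause in restrictions:
    print(f"- {clause.text}")
```

Rejecting unknown keys mirrors the goal of setting expectations clearly and upfront: a misspelled restriction should fail at release time rather than be dropped silently.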

OpenRAIL combines open access with specific behavioural restrictions. It still allows users to modify, share, and build upon AI models, but under terms that focus on responsible deployment. Unlike traditional licenses, it includes provisions about downstream use, which sets it apart from conventional open-source licenses.

One of the main features is the inclusion of acceptable and unacceptable use clauses. These typically cover areas like avoiding harm, upholding privacy, and not using models for discrimination, surveillance, or generating misleading content. The language is practical rather than theoretical, aiming to address real misuse scenarios seen in past model deployments.

Attribution is another key point. OpenRAIL requires users to credit the creators and cite the original model, helping preserve transparency as models evolve. This also helps downstream users track how a model has changed and whether it still aligns with its original goals.
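
One way to picture attribution across model versions is a small provenance record that each derivative carries forward. The sketch below is an assumption-laden illustration: `ModelRecord`, its fields, and the model and team names are all hypothetical, and OpenRAIL does not prescribe any particular data format for credits.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class ModelRecord:
    """Hypothetical provenance record; field names are assumptions for this sketch."""
    name: str
    creators: Tuple[str, ...]
    license_name: str
    changes: str = "original release"
    derived_from: Optional["ModelRecord"] = None


def attribution_chain(record: ModelRecord) -> list:
    """Walk from the given model back to the original release."""
    chain = []
    current: Optional[ModelRecord] = record
    while current is not None:
        chain.append(current)
        current = current.derived_from
    return chain


def attribution_notice(record: ModelRecord) -> str:
    """Build a plain-text credit line for every model in the lineage."""
    lines = []
    for entry in attribution_chain(record):
        credits = ", ".join(entry.creators)
        lines.append(
            f"{entry.name} by {credits} ({entry.license_name}): {entry.changes}"
        )
    return "\n".join(lines)


# Hypothetical lineage: a base model and one fine-tuned derivative.
base = ModelRecord("example-base", ("Example Lab",), "OpenRAIL-M")
fine_tuned = ModelRecord(
    "example-base-legal",
    ("Downstream Team",),
    "OpenRAIL-M",
    changes="fine-tuned on contract text",
    derived_from=base,
)
print(attribution_notice(fine_tuned))
```

Keeping the `changes` note next to each credit gives downstream users a way to see both who built a model and how it has diverged from the original.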

The license is split into two parts. One part governs the model and accompanying resources—weights, documentation, and training code. The other part governs responsible use. The RAIL-M version is tailored to model checkpoints, while RAIL-A targets applications built using those models. Together, they cover most of what AI developers release today.

OpenRAIL avoids being too prescriptive. It’s not a blacklist of forbidden tasks but rather a framework to encourage thoughtful use. Developers can tweak the terms to suit their project—adding stricter clauses for sensitive use cases or using a lighter version for lower-risk models.

Licensing may seem like a back-office concern, but in AI it shapes how models move through the world. OpenRAIL gives creators more influence over what happens after a model is released. This can reduce the risk of reputational damage, legal exposure, or unintended social harm.

In practice, OpenRAIL is already being used by several high-profile projects. The BLOOM language model was one of the first major releases under an OpenRAIL license. It allowed open use while making it clear that the model shouldn’t be used for disinformation or surveillance. This clarity helped organizations adopt the model with confidence, knowing what the license permits and forbids.

Unlike code libraries, AI models aren’t just tools—they can make decisions, generate content, and influence public perception. So, the stakes are higher. OpenRAIL doesn’t block creativity, but it asks developers and users to consider the consequences of deployment.

By providing legal language that addresses behaviour, not just access, OpenRAIL creates a form of ethical infrastructure. It offers a middle ground between completely unrestricted licenses and full proprietary control. That flexibility is especially useful in a field evolving as fast as AI.

OpenRAIL also encourages a culture of responsibility. Developers are more likely to think about impact if they’re asked to define use cases before release. And users have clearer guidelines about what’s acceptable. Over time, this could shift industry norms toward more mindful practices.

OpenRAIL isn’t without limitations. One challenge is drawing lines around what counts as misuse. AI applications are wide-ranging, and a restriction that makes sense in one context might block innovation in another. The license must remain adaptable while staying enforceable.

There’s also the question of enforcement. Licensing terms are only useful if violations can be identified and addressed. In practice, misuse can happen anonymously or in jurisdictions where enforcement is complicated. Still, OpenRAIL provides a legal foundation that can support action against clear violations of its use restrictions, which traditional open-source licenses do not contain.

Some in the open-source community raise concerns about whether OpenRAIL fits the “free software” model, which traditionally supports use for any purpose. OpenRAIL’s restrictions challenge that principle, but its creators argue that AI is fundamentally different. Given its potential for harm, they see these added terms not as censorship but as necessary safeguards.

To address these concerns, OpenRAIL licenses are intentionally flexible. Developers can choose what restrictions to include or modify the text to better fit their needs. This makes the license useful across different sectors, from academic research to commercial applications.

As AI grows, licensing frameworks like OpenRAIL may become standard for responsible model release. They keep innovation open while acknowledging AI’s real-world impact. If enough creators adopt and adapt OpenRAIL, it could become a quiet baseline in the AI community.

OpenRAIL allows developers to share AI models openly while setting boundaries to prevent misuse. It brings responsible use into the licensing process, helping creators influence how their models are applied. While it won’t solve every ethical challenge, it introduces much-needed accountability into open-source AI. By combining flexibility with clear terms, OpenRAIL supports innovation without giving up control—a practical step as AI tools become more widely used and impactful.

Advertisement

How the BERT natural language processing model works, what makes it unique, and how it compares with the GPT model in handling human language

Selecting the appropriate use case will help unlock AI potential. With smart generative AI tools, you can save money and time

Discover 8 legitimate ways to make money using ChatGPT, from freelance writing to email marketing campaigns. Learn how to leverage AI to boost your income with these practical side gigs

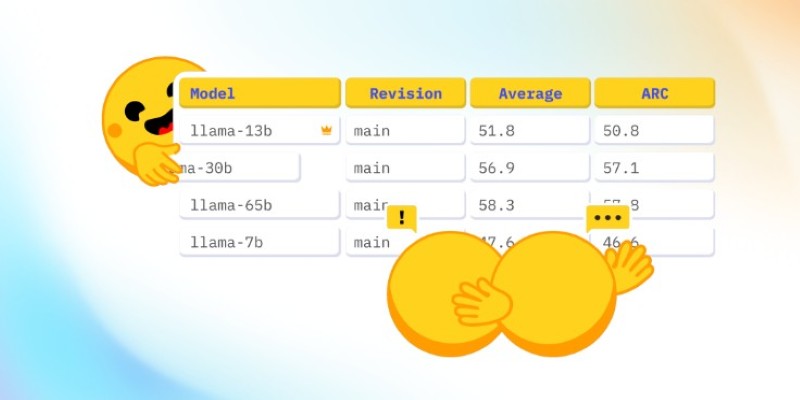

How CO₂ emissions and models performance intersect through data from the Open LLM Leaderboard. Learn how efficiency and sustainability influence modern AI development

From the legal power of emojis to the growing threat of cyberattacks like the Activision hack, and the job impact of ChatGPT AI, this article breaks down how digital change is reshaping real-world consequences

Explore 5 real-world ways students are using ChatGPT in school to study better, write smarter, and manage their time. Simple, helpful uses for daily learning

What DAX in Power BI is, why it matters, and how to use it effectively. Discover its benefits and the steps to apply Power BI DAX functions for better data analysis

A top Korean telecom investment in Anthropic AI marks a major move toward ethical, global, and innovative AI development

Oracle adds generative AI to Fusion CX, enhancing customer experience with smarter and personalized business interactions

What is the AI alignment control problem, and why does it matter? Learn how AI safety, machine learning ethics, and the future of superintelligent systems all depend on solving this growing issue

Curious about how Snapchat My AI vs. Bing Chat AI on Skype compares? This detailed breakdown shows 8 differences, from tone and features to privacy and real-time results

Is ChatGPT a threat to search engines, or is it simply changing how we look for answers? Explore how AI is reshaping online search behavior and what that means for traditional engines like Google and Bing