
Most people don’t think about how much happens behind the scenes when they ask a chatbot a question or prompt a language model to write a sentence. It looks simple, but generating a sentence is a slow, step-by-step process, and with larger models the delay becomes noticeable. Self-speculative decoding changes this. It’s a new method that speeds up how language models generate text.

Instead of producing one word at a time and waiting on each, the model drafts a few words ahead and then checks its guesses. The idea is simple, but the effects matter: faster responses, lower computing costs, and more efficient tools.

Text generation in modern AI uses something called autoregressive decoding. This means each new word (strictly, each token, which may be a whole word or a piece of one) depends on all the words that came before it. Imagine writing a sentence by picking each word only after seeing everything written so far. That’s what language models do. It works well, but it’s slow, especially when the model is large and the text is long. Each token requires a full pass through the entire neural network before the next one can be picked. For a short response, that’s fine. For long passages, stories, or conversations, you start to feel the lag.
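
The one-token-at-a-time loop can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a real model: `toy_model` and `autoregressive_decode` are hypothetical names, and `toy_model` is just a stand-in for a neural network's forward pass. What matters is the shape of the loop: each new token needs one full call that depends on everything generated so far.

```python
from typing import Callable, List, Tuple

# A "model" maps the tokens so far to its choice for the next token.
NextToken = Callable[[List[int]], int]


def toy_model(prefix: List[int]) -> int:
    """Hypothetical stand-in for a full forward pass; it uses the whole prefix."""
    return (sum(prefix) * 31 + len(prefix)) % 50


def autoregressive_decode(
    model: NextToken, prompt: List[int], max_new_tokens: int
) -> Tuple[List[int], int]:
    """Greedy decoding, one token per full model call; returns (tokens, calls)."""
    tokens = list(prompt)
    calls = 0
    for _ in range(max_new_tokens):
        next_token = model(tokens)  # must finish before the next step can start
        calls += 1
        tokens.append(next_token)
    return tokens, calls


if __name__ == "__main__":
    out, calls = autoregressive_decode(toy_model, [1, 2, 3], max_new_tokens=8)
    print(out[3:], "generated with", calls, "full model calls")
```

Generating 8 tokens takes 8 sequential calls, and no call can start before the previous one finishes. That sequential chain is the bottleneck self-speculative decoding targets.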

There’s also the issue of computing power. Every generated token consumes processor time, memory bandwidth, and energy. When millions of people use large language models at once, the cost adds up. These delays and costs make it harder to bring powerful models into real-time settings, like live chats or fast document editing.

Researchers have worked on ways to speed this up, like parallel decoding or caching intermediate results so they aren’t recomputed, but most of these either require changes to the model itself or give only modest gains. Self-speculative decoding offers a better balance: it speeds things up without needing a completely different system.

The concept is simple. Instead of predicting only the next word, the model quickly guesses a short sequence of next words, called a draft. Then it checks whether those guesses are right, like doing your homework and then grading it yourself. The draft comes from a cheaper, faster version of the same model (for example, one that skips some of its layers), and the full model only steps in to double-check.

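Let's break it down. The model first generates a few tokens ahead using a lightweight version of itself. This draft takes little time or compute. Then the full model checks all the drafted tokens together in a single pass, comparing each one with what it would have predicted itself. This is where the speedup comes from: checking several tokens in one pass takes roughly as long as generating a single token the normal way. Drafted tokens are accepted up to the first one the full model disagrees with. At that point the full model's own prediction replaces the wrong guess, the rest of the draft is discarded, and drafting resumes from there. Even with occasional rollbacks, the overall time saved is significant.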
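
The draft-then-verify loop can be sketched in Python for the greedy case. This is a minimal sketch under several assumptions: `full_model` and `draft_model` are hypothetical toy functions (the draft simply copies the full model but deliberately guesses wrong at some positions, purely to exercise the rejection logic), and `verify_in_one_pass` only simulates what a real transformer does in one forward pass over the prompt plus the draft. The sketch accepts drafted tokens up to the first disagreement and then takes the full model's own token at that position.

```python
from typing import Callable, List, Tuple

# A "model" maps a token prefix to its greedy next-token choice.
NextToken = Callable[[List[int]], int]


def full_model(prefix: List[int]) -> int:
    """Hypothetical stand-in for the full (slow) language model."""
    return (sum(prefix) * 31 + len(prefix)) % 50


def draft_model(prefix: List[int]) -> int:
    """Hypothetical stand-in for the cheap draft version of the same model.

    It copies the full model but deliberately guesses wrong whenever the
    prefix length is a multiple of 5, so that some drafts get rejected.
    """
    guess = full_model(prefix)
    return guess if len(prefix) % 5 else (guess + 1) % 50


def verify_in_one_pass(full: NextToken, prefix: List[int], draft: List[int]) -> List[int]:
    """Full model's choice after prefix + draft[:i] for every i in 0..len(draft).

    A real transformer gets all of these from ONE forward pass over
    prefix + draft; this loop only simulates that single pass.
    """
    return [full(prefix + draft[:i]) for i in range(len(draft) + 1)]


def self_speculative_decode(
    full: NextToken,
    draft_fn: NextToken,
    prompt: List[int],
    max_new_tokens: int,
    k: int = 4,
) -> Tuple[List[int], int]:
    """Greedy draft-and-verify decoding; returns (tokens, full-model passes)."""
    tokens = list(prompt)
    full_passes = 0
    while len(tokens) - len(prompt) < max_new_tokens:
        # 1. Draft k tokens cheaply, one after another.
        draft: List[int] = []
        for _ in range(k):
            draft.append(draft_fn(tokens + draft))
        # 2. Check all drafted positions with a single full-model pass.
        targets = verify_in_one_pass(full, tokens, draft)
        full_passes += 1
        # 3. Accept drafted tokens up to the first disagreement...
        n = 0
        while n < k and draft[n] == targets[n]:
            n += 1
        # ...then add the full model's own token at that position
        # (or one bonus token if the whole draft was accepted).
        tokens.extend(draft[:n] + [targets[n]])
    # The last round may overshoot, so trim to the requested length.
    return tokens[: len(prompt) + max_new_tokens], full_passes


if __name__ == "__main__":
    out, passes = self_speculative_decode(full_model, draft_model, [1, 2, 3], max_new_tokens=20)
    print(out[3:], "using", passes, "full-model passes")
```

With the prompt `[1, 2, 3]` and 20 new tokens, this sketch returns exactly the same tokens as plain one-at-a-time greedy decoding with `full_model`, while counting 5 full-model passes instead of 20. In a real system the saving depends on how often the draft is right.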

The key is that the draft is not random. It's based on the same logic the model already uses, just made lighter and faster. Think of it like writing the first version of a sentence quickly and then proofreading it, rather than carefully writing each word one by one. Much of the time, the draft matches what the full model would have written, so those tokens are kept as they are.

The biggest benefit of self-speculative decoding is speed. Letting models leap ahead in their predictions and check them afterward cuts the waiting time for text generation. For real-time applications, such as live translation, code completion, or interactive storytelling, this matters a great deal. Even a small delay can break the flow of a conversation or keep a user from getting into a rhythm.

Another benefit is lower cost. Since fewer sequential full-model passes are needed for the same text, each response ties up the hardware for less time. This makes it more affordable to run large models continuously. For companies building tools on top of AI, that means better scaling without inflating the budget. The method also works without changing the structure of the model, so it can be added to existing systems.
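
A rough back-of-the-envelope calculation shows how fewer full-model passes add up. It rests on a simplifying assumption, not a property of any particular system: each of the `k` drafted tokens is accepted independently with probability `alpha`. The i-th drafted token survives only if all earlier ones did (probability `alpha**i`), and each verification pass always contributes one token of its own, so the average is 1 + alpha + alpha² + ... + alphaᵏ tokens per full-model pass. The function name is hypothetical.

```python
def expected_tokens_per_pass(alpha: float, k: int) -> float:
    """Average tokens produced per full-model verification pass.

    Assumes each of the k drafted tokens is accepted independently with
    probability alpha. The i-th draft token survives with probability
    alpha**i, and the full model always adds one token, so the result is
    1 + alpha + alpha**2 + ... + alpha**k, which lies between 1 and k + 1.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be between 0 and 1")
    if k < 0:
        raise ValueError("k must be non-negative")
    return sum(alpha**i for i in range(k + 1))


for alpha in (0.5, 0.8, 0.9):
    print(f"alpha={alpha}: {expected_tokens_per_pass(alpha, k=4):.2f} tokens per full pass")
```

For example, with `alpha = 0.8` and `k = 4` the formula gives about 3.36 tokens per full-model pass. This counts tokens per pass, not wall-clock speedup: the drafting steps also take time, so the real-world gain is smaller.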

There’s also more flexibility. Ordinary speculative decoding needs a second, smaller model to write the drafts. Self-speculative decoding instead uses the same model in two roles: a fast draft version and a slower verifier. This avoids the overhead of training and serving multiple systems and keeps everything contained in one setup.
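
One model in two roles can be sketched as follows. A common way to get a cheap drafter out of the same network is to skip some of its layers while drafting; this sketch assumes that approach. The toy layers, the hidden-state vector standing in for an embedded prompt, `draft_skip`, and the class name `SharedWeightModel` are all hypothetical, chosen only to show that drafter and verifier share one set of weights while the drafter does less work.

```python
from typing import Callable, List, Set

# A layer maps a hidden-state vector to a new hidden-state vector.
Layer = Callable[[List[float]], List[float]]


def make_layer(shift: int, weight: float) -> Layer:
    """Hypothetical toy layer: a residual update that mixes in a rotated copy."""
    def layer(h: List[float]) -> List[float]:
        n = len(h)
        return [h[i] + weight * h[(i + shift) % n] for i in range(n)]
    return layer


class SharedWeightModel:
    """One stack of layers (one set of weights) used in two roles."""

    def __init__(self, layers: List[Layer], draft_skip: Set[int]):
        self.layers = layers
        self.draft_skip = draft_skip  # hypothetical choice of layers to skip when drafting
        self.layer_calls = 0  # rough proxy for compute spent

    def _run(self, h: List[float], skip: Set[int]) -> int:
        for i, layer in enumerate(self.layers):
            if i in skip:
                continue  # skipped layer: no compute spent
            h = layer(h)
            self.layer_calls += 1
        # Stand-in for picking the most likely token: index of the largest value.
        return max(range(len(h)), key=lambda j: h[j])

    def draft_next(self, h: List[float]) -> int:
        """Fast drafter: same weights, but the layers in draft_skip are not run."""
        return self._run(h, self.draft_skip)

    def full_next(self, h: List[float]) -> int:
        """Slow verifier: every layer runs."""
        return self._run(h, set())


if __name__ == "__main__":
    model = SharedWeightModel([make_layer(s, 0.1) for s in range(1, 9)], draft_skip={2, 3, 4, 5})
    hidden = [0.1, 0.5, 0.2, 0.9]
    print("draft:", model.draft_next(hidden), "full:", model.full_next(hidden))
    print("layer evaluations:", model.layer_calls)
```

Here the drafter runs 4 of the 8 layers and the verifier runs all 8, yet there is only one list of layers to train, store, and load. Which layers can be skipped without hurting draft quality is a tuning decision in real systems; the set used here is arbitrary.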

From a research angle, it opens new doors too. Self-speculative decoding shows that language models can get faster without sacrificing quality: because the full model checks every token, the final output still follows the full model's own predictions. This might shift how future models are trained, possibly building draft-and-verify loops into their very structure.

Self-speculative decoding is not just a performance trick—it’s a way of rethinking how language models operate. It lets models work smarter, not harder, by doing more with fewer steps. As large language models are used in everything from customer support to education, these kinds of gains matter.

The idea mirrors how people often work. We write something quickly, read it back, and correct it as needed. It turns out machines can follow the same pattern and reach the same results faster. In practice, this means faster chatbots, quicker document summaries, and more seamless AI experiences.

Speed alone isn’t everything, but when it’s combined with accuracy and efficiency, it opens the door to more practical uses. Self-speculative decoding balances these factors. It doesn’t cut corners; it makes smart use of time and resources. It’s the kind of innovation that doesn’t just make AI faster but also makes it easier to rely on in real applications.

The future of AI depends on how well we can scale performance without raising costs or lowering quality. Self-speculative decoding takes a big step toward that goal. By letting models guess ahead and then check their work, it speeds up generation without needing a new kind of architecture or extra training. It's a change in how we think about decoding, not just how we run it. That shift could shape how the next generation of language models is built and used. For anyone tired of waiting on responses or dealing with lag, this small technical change could feel like a big improvement.

Advertisement

Discover four simple ways generative AI boosts analyst productivity by automating tasks, insights, reporting, and forecasting

Explore the journey from GPT-1 to GPT-4. Learn how OpenAI’s lan-guage models evolved, what sets each version apart, and how these changes shaped today’s AI tools

Curious about how Snapchat My AI vs. Bing Chat AI on Skype compares? This detailed breakdown shows 8 differences, from tone and features to privacy and real-time results

AI prompt engineering is becoming one of the most talked-about roles in tech. This guide explains what it is, what prompt engineers do, and whether it offers a stable AI career in today’s growing job market

Compare Notion AI vs ChatGPT to find out which generative AI tool fits your workflow better. Learn how each performs in writing, brainstorming, and automation

Is ChatGPT a threat to search engines, or is it simply changing how we look for answers? Explore how AI is reshaping online search behavior and what that means for traditional engines like Google and Bing

How the BERT natural language processing model works, what makes it unique, and how it compares with the GPT model in handling human language

Start learning natural language processing (NLP) with easy steps, key tools, and beginner projects to build your skills fast

How self-speculative decoding improves faster text generation by reducing latency and computational cost in language models without sacrificing accuracy

Discover 8 legitimate ways to make money using ChatGPT, from freelance writing to email marketing campaigns. Learn how to leverage AI to boost your income with these practical side gigs

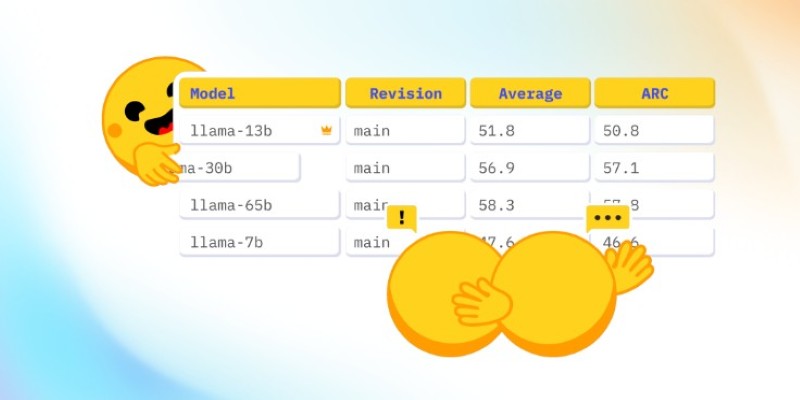

How CO₂ emissions and models performance intersect through data from the Open LLM Leaderboard. Learn how efficiency and sustainability influence modern AI development

A top Korean telecom investment in Anthropic AI marks a major move toward ethical, global, and innovative AI development